How To Create vSphere Storage DRS Datastore Clusters in 9 Easy Steps

Storage DRS can alleviate the problems associated with VM provisioning and storage environment monitoring. Here are the steps to create a Storage DRS datastore cluster.

Storage DRS is a new feature introduced in vSphere 5.0 that helps prevent these problems. It provides smart virtual machine placement and load-balancing mechanisms based on I/O and space capacity. Storage DRS helps decrease the operational effort associated with provisioning virtual machines and monitoring the storage environment. The first step in enabling Storage DRS is creating a datastore cluster:

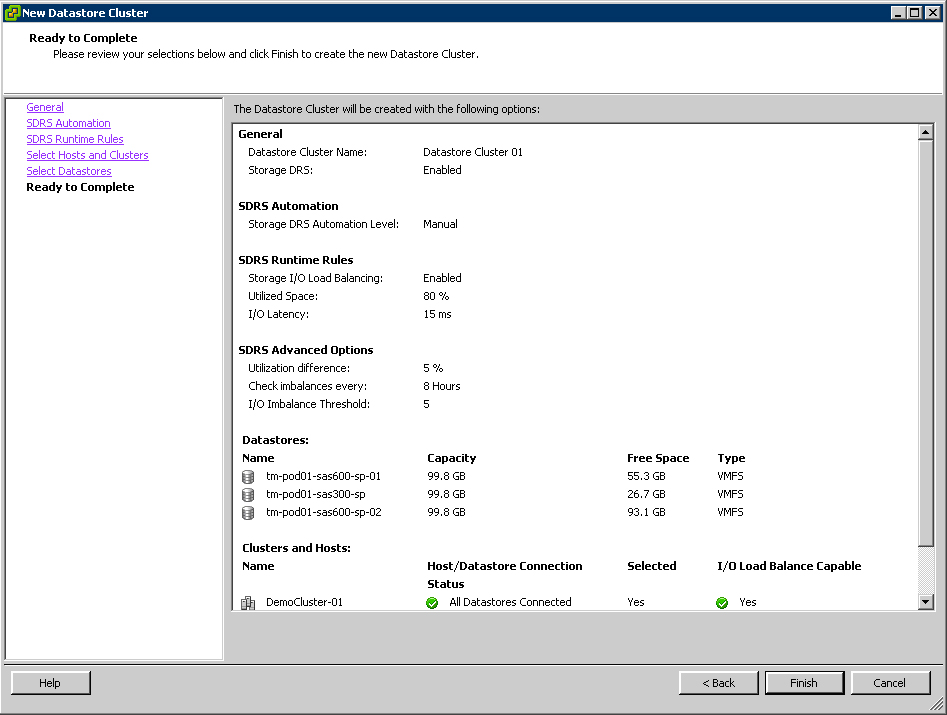

1. From the vCenter Home view, select Datastores and Datastore Clusters.

Figure 1. Select Datastores and Datastore Clusters.

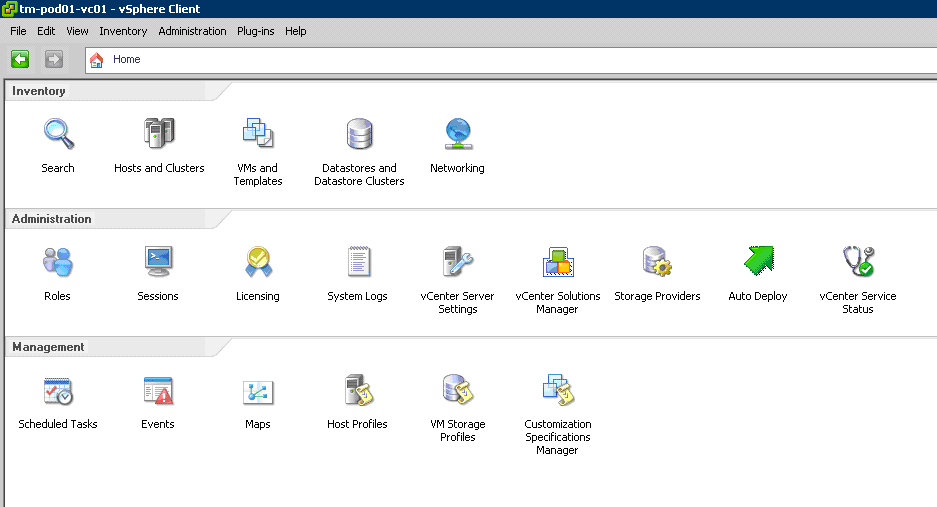

Figure 2. Create a New Datastore Cluster.

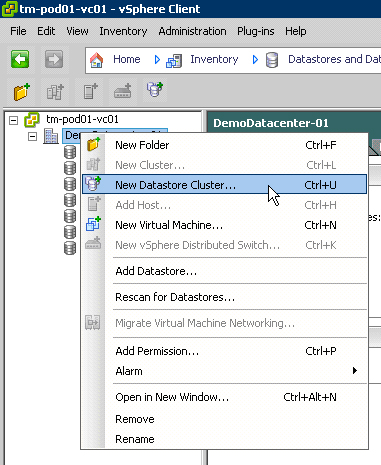

Figure 3. Name your new Datastore Cluster.

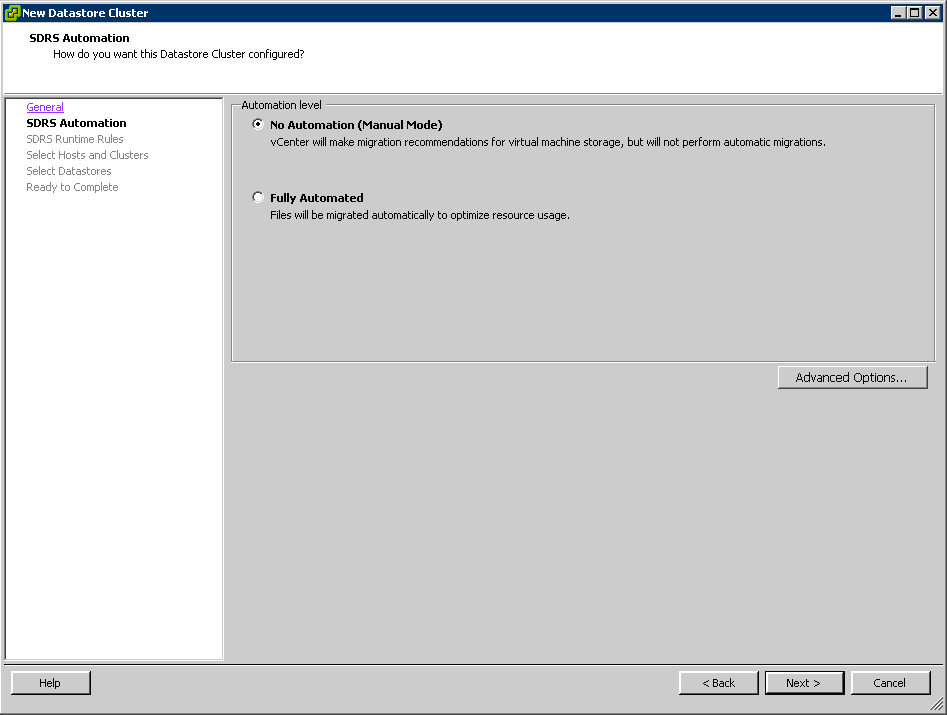

Figure 4. Select the No Automation option.

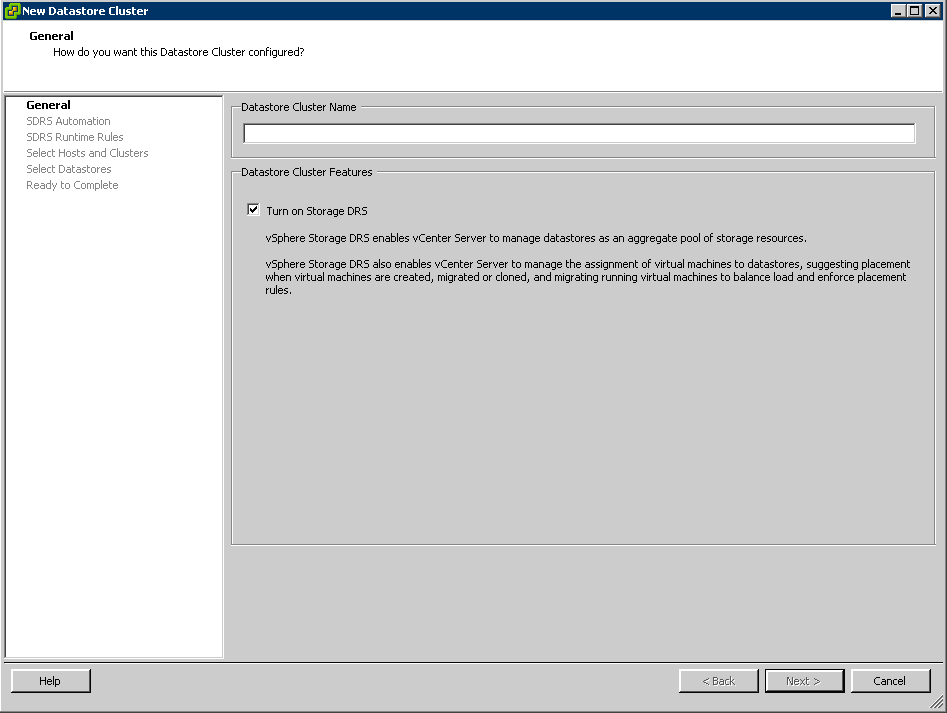

As in the early days of DRS, start in manual mode and review the recommendations that Storage DRS provides. Once you feel comfortable with them, consider setting Storage DRS to Fully Automated. You can change this setting at any time, so you can switch back and forth.

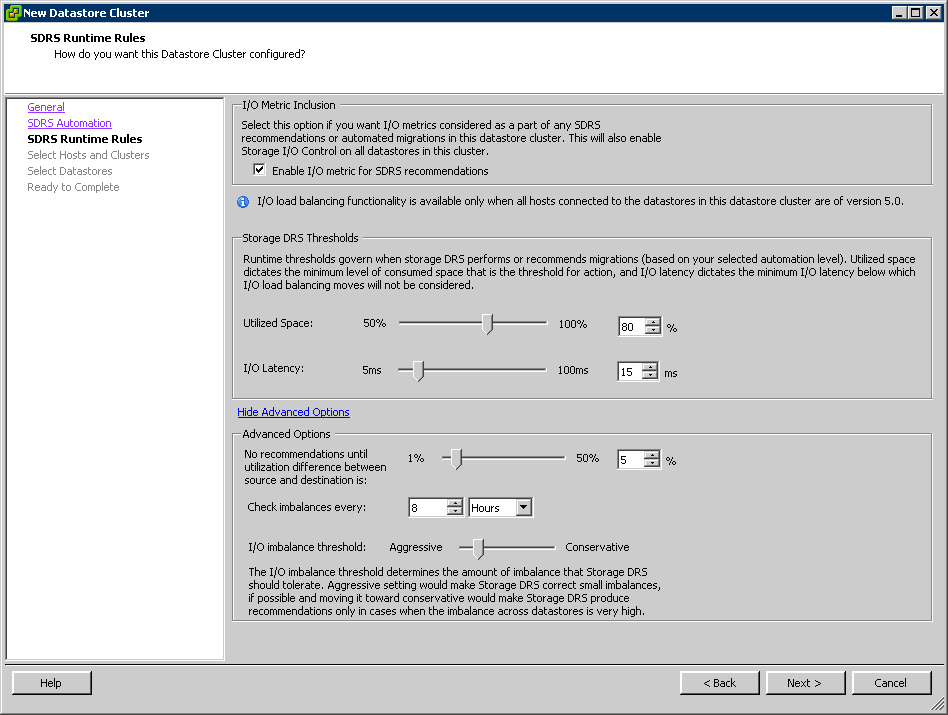

5. Click on Show Advanced Options.

Figure 5. Select Show Advanced Options for more configuration options.

The utilization difference is the minimum difference in space utilization between the source and the destination datastore. When selecting a destination, Storage DRS filters out those datastores whose utilization difference is below this threshold. The default is 5%.
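To make the filtering rule concrete, here is a minimal Python sketch. It only illustrates the rule as described in this article and is not Storage DRS's actual implementation; the function name, datastore names and utilization figures are hypothetical.

```python
def candidate_destinations(source_util, datastores, min_difference=5.0):
    """Return the names of datastores that pass the utilization-difference filter.

    source_util and the values in `datastores` are space utilization in percent.
    A datastore is kept only if it is at least `min_difference` percentage
    points less utilized than the source.
    """
    return [
        name
        for name, util in datastores.items()
        if source_util - util >= min_difference
    ]


# Hypothetical cluster: the source datastore is 82% full.
others = {"ds-02": 80.0, "ds-03": 76.5, "ds-04": 60.0}
print(candidate_destinations(82.0, others))
```

With the default of 5%, `ds-02` is dropped because it is only 2 points below the source, while `ds-03` and `ds-04` remain candidates.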

The aggressiveness factor determines the amount of I/O imbalance Storage DRS should tolerate. The invocation period, by default 8 hours, determines how often Storage DRS will evaluate the environment and possibly generate recommendations.

Review the space utilization threshold and understand how this percentage affects the storage reserved per datastore. The default threshold of 80% utilized space leaves 20% of each datastore in reserve; however, this might be too much if large datastores are used or too little if small datastores are present. Tuning depends on how much storage you feel you need to reserve so that normal provisioning can continue while you add storage.
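A quick calculation shows why the same percentage behaves very differently across datastore sizes. The sketch below is plain arithmetic; the function name and the example capacities are hypothetical, and the 20% reserve is the default figure mentioned above.

```python
def reserved_gb(capacity_gb, reserve_pct=20):
    """Capacity in GB that stays free on a datastore for a given reserve percentage."""
    return capacity_gb * reserve_pct / 100


# Hypothetical datastore sizes, from small to very large.
for capacity in (500, 2048, 16384):
    print(f"{capacity:>6} GB datastore -> {reserved_gb(capacity):.1f} GB kept in reserve")
```

On a 500 GB datastore the reserve is only 100 GB, which a few new virtual machines can consume quickly, whereas on a 16 TB datastore the same setting holds back several terabytes.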

6. Review default settings and click Next. Note that Storage DRS enables Storage I/O Control automatically when "I/O Metric" is enabled.

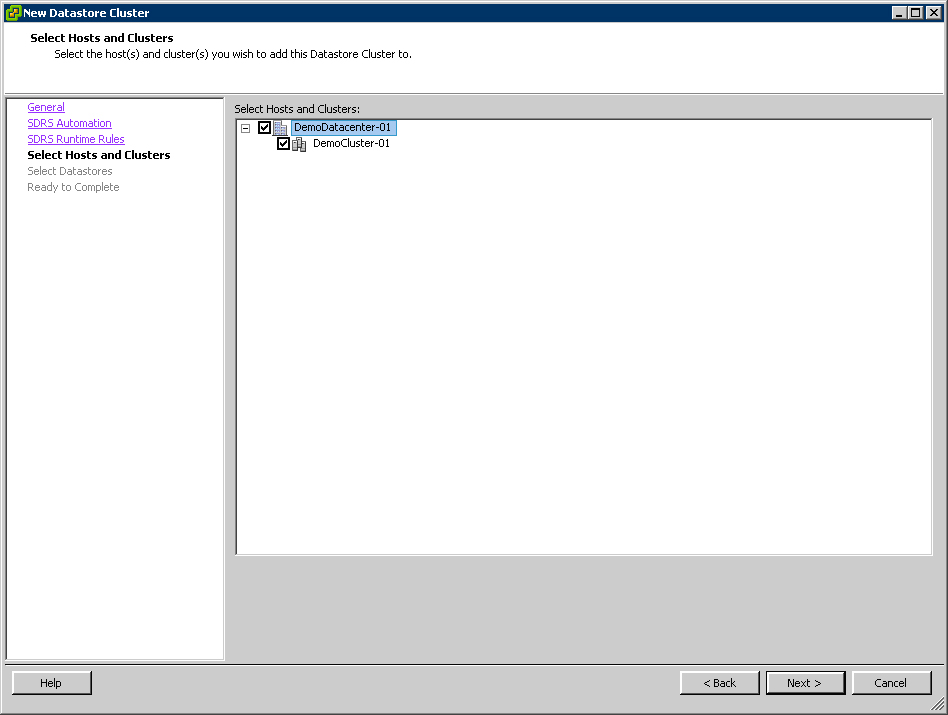

7. Select the cluster to which you want to add this Datastore Cluster.

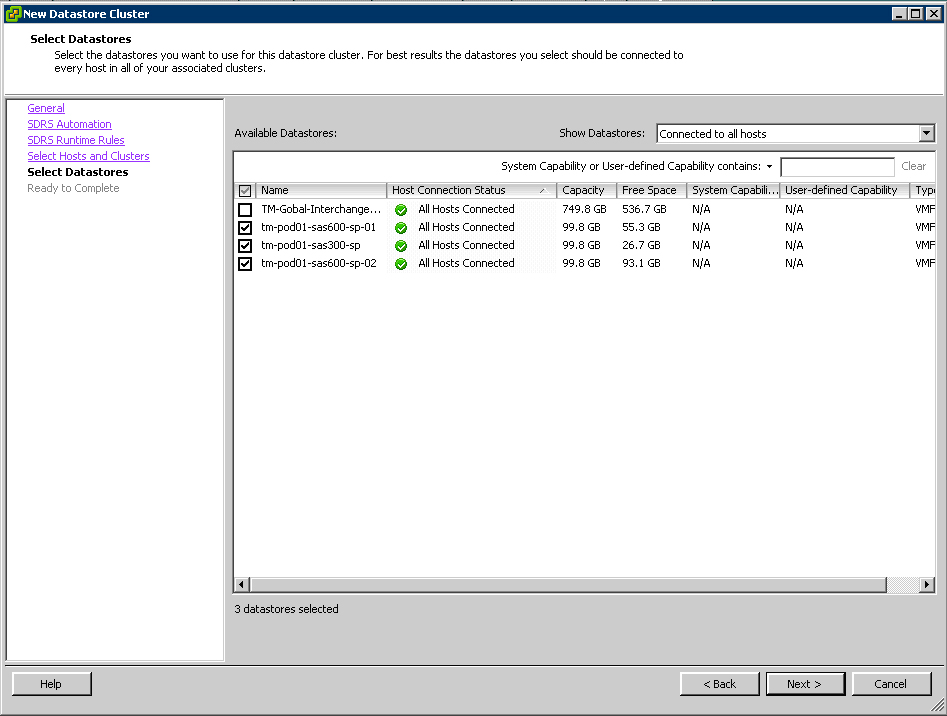

Figure 6. Select the cluster to which you will add the newly created Datastore Cluster.

8. Select the datastores which should be part of this Datastore Cluster.

We are using datastores which already contain virtual machines. Creating a datastore cluster is a non-disruptive task and can be done during production hours if needed. Select datastores that are connected to all hosts inside the compute cluster, as this provides the best load-balancing options at both the compute cluster and datastore cluster levels. The host connection status column helps to identify any current connectivity inconsistencies in your compute cluster.

9. Review your selections, ensure all hosts are connected to the datastores in the datastore cluster, and click Finish.

The datastore cluster will now be created, and a new object should appear in the "Datastores and Datastore Clusters" view. This object should contain the selected datastores.
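The connectivity check from step 8 (datastores should be connected to every host in the compute cluster) can also be expressed as a small set comparison. This is a hedged sketch over hand-written inventory data, not a call into any vSphere API; the function name, host names and datastore names are all hypothetical.

```python
def check_connectivity(datastore_hosts, cluster_hosts):
    """Split datastores into fully connected ones and ones missing hosts.

    datastore_hosts maps a datastore name to the hosts that have it mounted.
    Returns (fully_connected, partial), where `partial` maps each partially
    connected datastore to the sorted list of hosts it is missing.
    """
    required = set(cluster_hosts)
    fully_connected, partial = [], {}
    for name, hosts in datastore_hosts.items():
        missing = required - set(hosts)
        if missing:
            partial[name] = sorted(missing)
        else:
            fully_connected.append(name)
    return fully_connected, partial


# Hypothetical three-host compute cluster.
cluster = ["esx-01", "esx-02", "esx-03"]
inventory = {
    "ds-01": ["esx-01", "esx-02", "esx-03"],
    "ds-02": ["esx-01", "esx-03"],
}
ok, partial = check_connectivity(inventory, cluster)
print("Fully connected:", ok)
print("Missing hosts:", partial)
```

Datastores that end up in the second result are the ones the host connection status column would flag as inconsistent.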